
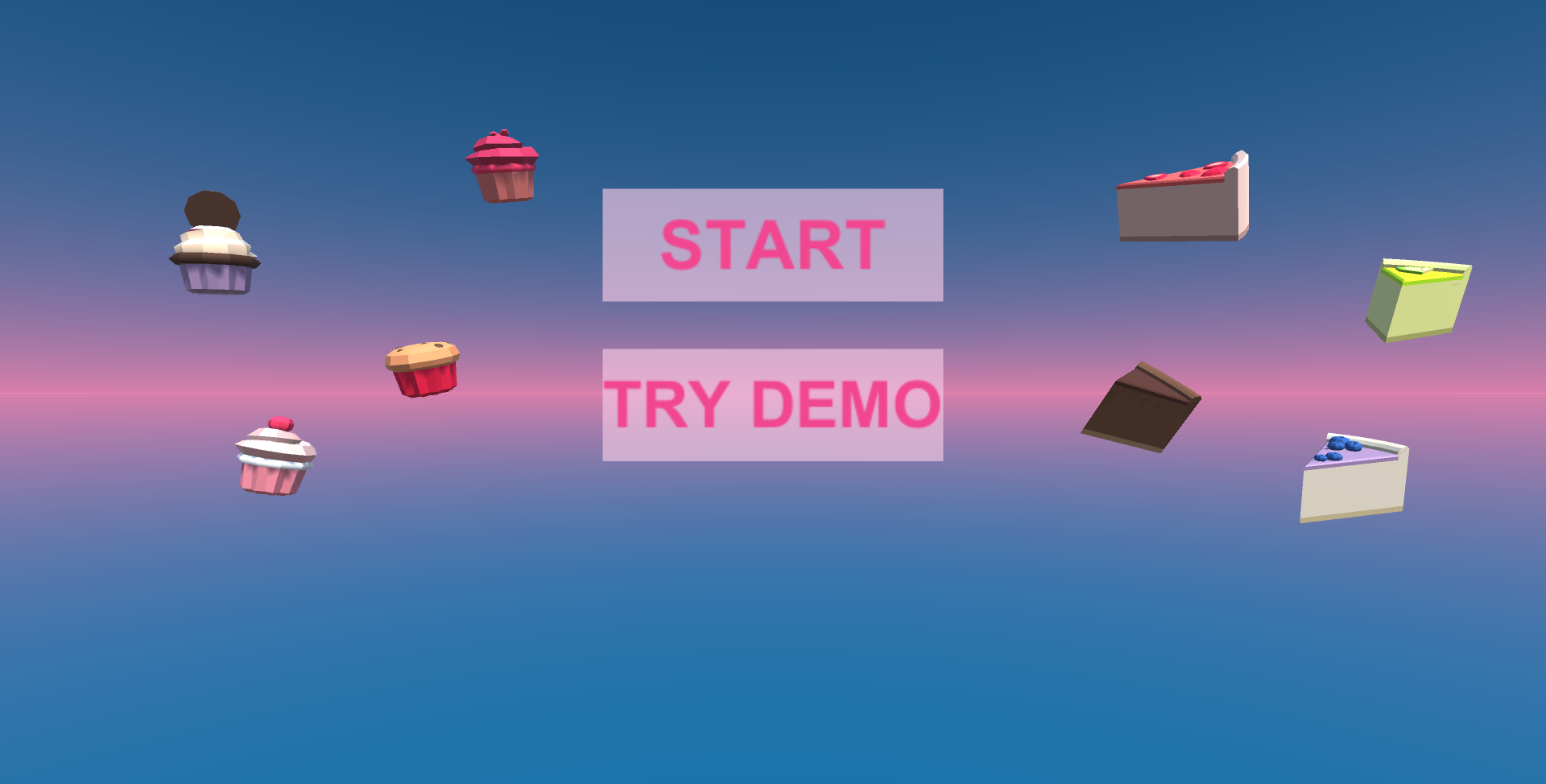

In the second week of the semester we started working on our own projects for the pressure cooker. My first concept was different from what I presented on Friday; it evolved naturally through feedback and brainstorming. My first idea was based on a WarioWare minigame: link. I thought it would be fun to see animals walking around and then have the player answer questions about them. When I talked to a teacher, he told me that animating animals would be too much work for a small prototype and suggested replacing them with something like a candy shop, where you answer questions about the types of candy you see in the store. This sounded fun and cute to make, but I was still worried that the randomization would be too much work. I did start building a candy store scene, because I liked that part a lot.

Setting up Unity to work with my Oculus headset via OpenXR took a while, so I did not have a lot of time left once everything worked. I used tutorials like this one: link, to learn how this works and how to program things like grabbing objects. I then redefined my concept, and it became a sorting game with a pivoting board: you slam the board to sort the candies into boxes.

The above is the prototype I tested on Friday. I have a separate post for that: link.

After testing, I took the feedback into account and fixed some bugs. I added a menu where you can choose between the old version (with the pivoting board) and a new version with a static table, where you grab the treats and throw them into the boxes. The new version also has a garbage bin for treats that don't belong in any of the boxes. I also added music.

How did I make my game?

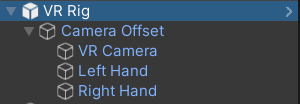

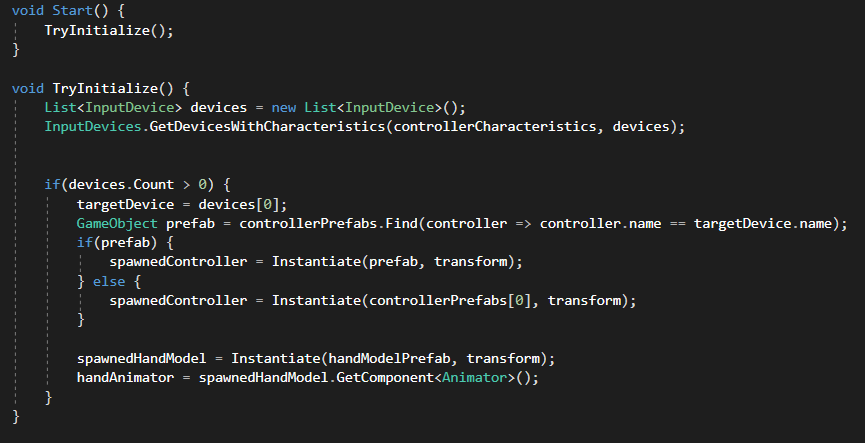

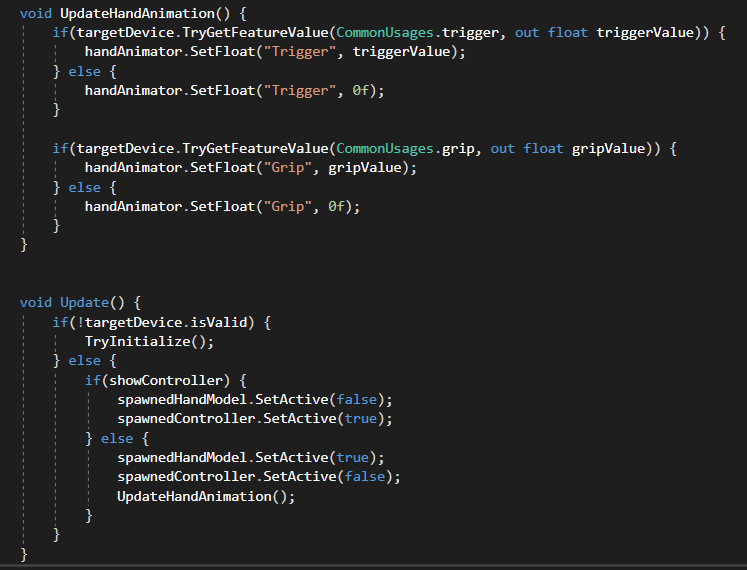

For this project I used OpenXR because it works best with the Oculus Quest 2, and at the beginning of the pressure cooker I still planned to program hand tracking for the Quest if I had time left (I did not). I installed OpenXR via the Package Manager, and the first thing I added was a VR Rig. The rig defines where the player is in the play area and contains the VR camera and the player's hands. For the menu I also added rays that you point at a button to select and press it. The hands have a script that spawns either a controller model or a hand model, and the hands are animated based on the buttons you press.

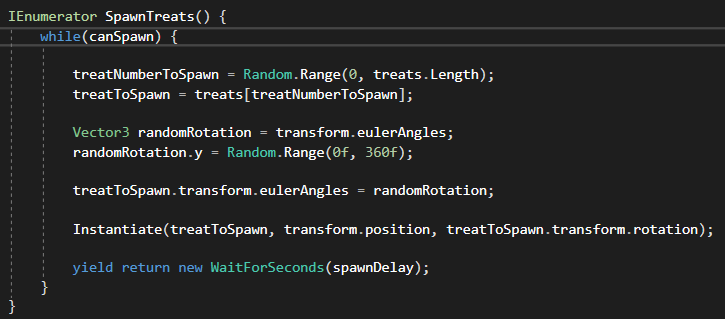

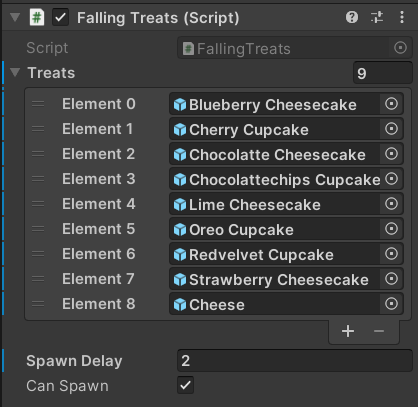

Spawning treats

The spawning code is also simple. I used a coroutine that spawns a treat every X seconds; both the interval and the list of treats to spawn can be set in the Inspector.

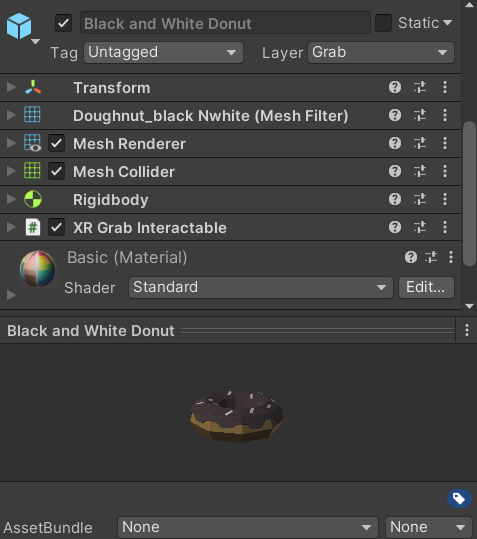
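The spawn loop can be illustrated outside Unity. The block below is a Python simulation, not the project's C# script: a generator plays the role of the coroutine, each yielded number is the wait time (standing in for `WaitForSeconds`), and `run_for` is a hypothetical stand-in for Unity's scheduler. The treat names, the 2-second interval and the helper names are assumptions for illustration.

```python
import random
from typing import Iterator, Sequence


def spawn_treats(treats: Sequence[str], interval: float,
                 rng: random.Random, spawned: list[str]) -> Iterator[float]:
    """Coroutine-style spawn loop: wait `interval` seconds, spawn a random treat, repeat.

    Yielding a number of seconds stands in for Unity's
    `yield return new WaitForSeconds(interval);` inside an IEnumerator.
    """
    while True:
        yield interval                       # wait before each spawn
        spawned.append(rng.choice(treats))   # "instantiate" one random treat


def run_for(coroutine: Iterator[float], duration: float) -> None:
    """Hypothetical stand-in for Unity's scheduler: keep resuming until `duration` has passed."""
    clock = next(coroutine)       # run up to the first wait
    while clock <= duration:
        clock += next(coroutine)  # resume: spawn once, then receive the next wait


# Hypothetical treat names; in the game these would be prefabs assigned in the Inspector.
spawned: list[str] = []
run_for(spawn_treats(["lollipop", "gummy bear", "donut"], 2.0, random.Random(42), spawned), 10.0)
print(len(spawned))  # 5 spawns, at t = 2, 4, 6, 8 and 10 seconds
```

Because the interval and the treat list are plain parameters, they map naturally onto fields you can tweak in the Inspector without touching the loop itself.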

All treats have a Rigidbody and a Mesh Collider (in the pivoting version the board has them too, so you can push it with your virtual hands). The treats also have an XR Grab Interactable component, which lets the player grab them and throw them into the boxes.

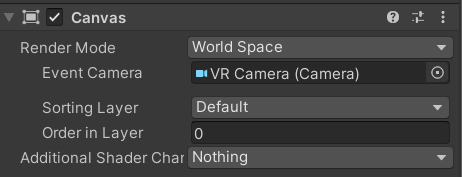
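XR Grab Interactable takes care of the throw itself. To give an idea of what throwing involves, here is a Python sketch of one common way to estimate a release velocity: remember the last few hand positions and divide the displacement by the elapsed time, which smooths out jitter from any single frame. This is a generic technique, not the toolkit's actual implementation; the class name, the window size and the sample data are assumptions.

```python
from collections import deque


class ReleaseVelocityEstimator:
    """Estimates a throw velocity from the last few tracked hand positions.

    A generic smoothing technique for illustration, not XR Interaction Toolkit code.
    """

    def __init__(self, window: int = 5):
        # Only the most recent `window` (time, position) samples are kept.
        self.samples: deque = deque(maxlen=window)

    def add_sample(self, time: float, position: tuple[float, float, float]) -> None:
        self.samples.append((time, position))

    def release_velocity(self) -> tuple[float, ...]:
        """Average velocity over the window: total displacement / elapsed time."""
        if len(self.samples) < 2:
            return (0.0, 0.0, 0.0)
        (t0, p0), (t1, p1) = self.samples[0], self.samples[-1]
        dt = t1 - t0
        if dt <= 0:
            return (0.0, 0.0, 0.0)
        return tuple((b - a) / dt for a, b in zip(p0, p1))


# Hand moving along x at 2 m/s, sampled every 0.25 s (8 samples; the window keeps the last 5).
estimator = ReleaseVelocityEstimator(window=5)
for i in range(8):
    t = i * 0.25
    estimator.add_sample(t, (2.0 * t, 1.0, 0.0))
print(estimator.release_velocity())  # (2.0, 0.0, 0.0)
```

In a game, a velocity like this would be handed to the treat's Rigidbody at the moment of release, after which the physics engine carries it toward the boxes.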

UI and Canvas

For the main menu (and for the text above the boxes) I learned about UI in VR. I learned that you have to assign the VR camera to the canvas instead of the normal camera, and that you can make the canvas a fixed object in the scene by switching its render mode from Screen Space to World Space.

Animations

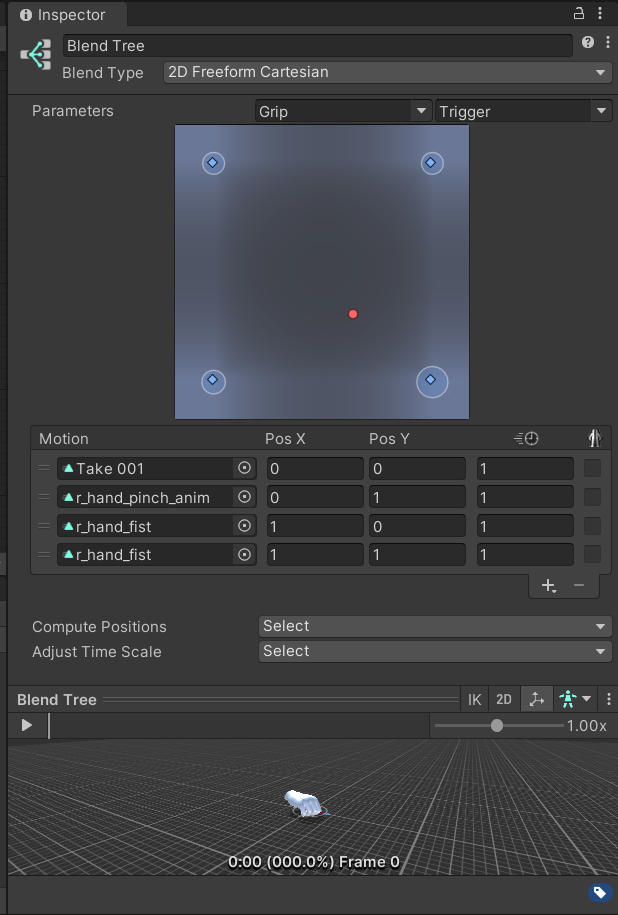

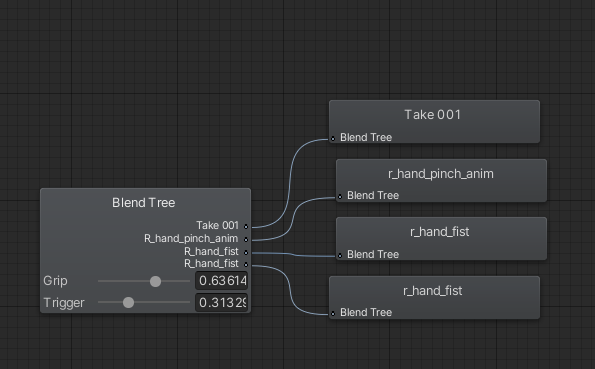

For the hand animations I learned something new from the VR tutorials on YouTube I mentioned earlier: a blend tree. A blend tree blends between animations based on an input value, so pressing the trigger only partway curls the fingers a little instead of making a full fist.
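The idea behind a one-dimensional blend tree can be shown with a little arithmetic. In the Python sketch below, each motion sits at a threshold of the input parameter (here the trigger value), and the two motions on either side of the current value are mixed in proportion to how close the value is to each. This is roughly how I understand a 1D blend tree to behave; the pose representation (one curl value per finger) and all names are simplifications for illustration, since real animation clips blend full bone transforms.

```python
from bisect import bisect_right
from typing import Sequence


def blend_weights(thresholds: Sequence[float], param: float) -> list[float]:
    """1D blend: the two motions whose thresholds bracket `param` share the weight
    linearly; every other motion gets 0. Thresholds must be sorted ascending."""
    weights = [0.0] * len(thresholds)
    if param <= thresholds[0]:
        weights[0] = 1.0
    elif param >= thresholds[-1]:
        weights[-1] = 1.0
    else:
        hi = bisect_right(thresholds, param)   # index of first threshold above param
        lo = hi - 1
        t = (param - thresholds[lo]) / (thresholds[hi] - thresholds[lo])
        weights[lo], weights[hi] = 1.0 - t, t
    return weights


def blend_pose(poses: Sequence[Sequence[float]], thresholds: Sequence[float],
               param: float) -> list[float]:
    """Weighted sum of poses; a pose here is one curl value per finger (0 = straight, 1 = curled)."""
    weights = blend_weights(thresholds, param)
    return [sum(w * pose[i] for w, pose in zip(weights, poses)) for i in range(len(poses[0]))]


OPEN_HAND = [0.0] * 5   # thumb .. pinky, all straight
FIST = [1.0] * 5        # all fully curled

# Trigger value from the controller: 0 (released) .. 1 (fully pressed).
print(blend_pose([OPEN_HAND, FIST], [0.0, 1.0], 0.25))  # [0.25, 0.25, 0.25, 0.25, 0.25]
```

With a quarter-pressed trigger the fingers are a quarter of the way to a fist, which is exactly the in-between stage a single on/off animation cannot show.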

What did I learn?

I learned a lot from this pressure cooker. I learned how to set up a VR game for Oculus and how things like a canvas work differently in VR than in a normal game. I also learned a new type of animation where animations flow into each other, so you can have in-between stages, for example a hand that is on its way to making a fist but not all the way. Finally, I learned about grabbable objects and raycasting for pointing in VR.

If I develop this game further, I definitely want to add a score system and make the pivoting board less buggy.